Artificial intelligence (AI) has moved into the fast lane and is poised to be one of the most promising technologies of this decade. AI has become very sophisticated, and better algorithms keep demanding more computing power. Silicon chips, the carrier of AI, are the bottleneck in delivering that computing power. AI workloads call for new hardware designed specifically for AI software, so that neural networks can be trained quickly and with less power. Moore's Law has driven semiconductor process technology close to its limit: we cannot keep shrinking the chip and packing more transistors onto the silicon die, because quantum tunneling effects become significant once the device gate shrinks below 5 nm.

The technology behind AI can play a critical role in driving economic growth, as AI is seen as the pivotal part of many products, such as self-driving cars, robotics, smart homes, industrial controls and many others. AI technology consists of algorithms, processing power and data, and the processing power depends on the hardware: AI chips. Hundreds of companies are currently involved in the research and manufacturing of AI chips. As AI becomes the mainstream technological trend, more and more companies will join the fight for the AI market.

AI chips are still at an early stage, and we have not yet seen any all-in-one device. AI chips are application oriented, so the market offers many different types for various applications, including GPUs (Graphics Processing Units), NPUs (Neural Processing Units), TPUs (Tensor Processing Units), the Amazon AI chip for the Alexa home assistant, the Apple AI chip for Siri and Face ID, and the Tesla AI chip for self-driving electric cars. From all of those on the market, let's take a look at seven of them.

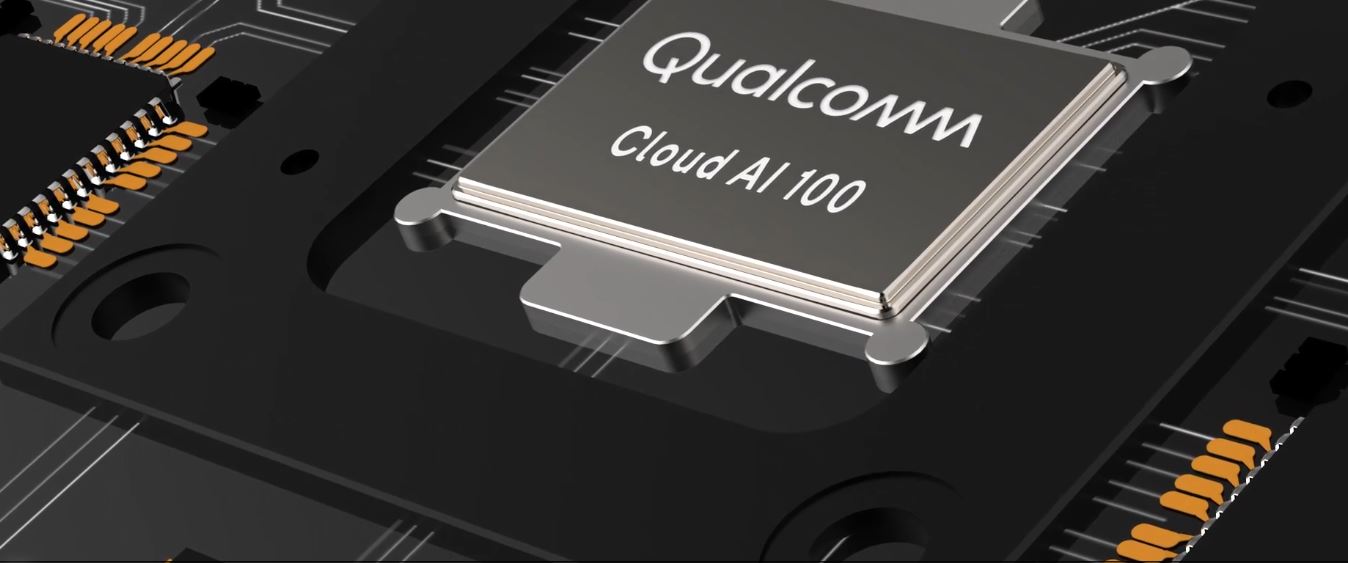

Qualcomm Cloud AI 100

Qualcomm Inc. launched the new Cloud AI 100 chip, which can translate audio input into text-based requests using an AI algorithm trained on massive amounts of data. The Qualcomm Cloud AI 100 is designed to meet the cloud AI inferencing needs of datacenter providers. The chip is built from the ground up to accelerate AI experiences, providing a turn-key solution that addresses the most important aspects of cloud AI inferencing: low power consumption, scale, process node leadership, and signal processing expertise. Together these help datacenters run inference on the edge cloud faster and more efficiently.

- Low power consumption
  - 10x performance improvement over available AI inference accelerator solutions
- Process node leadership
  - Built from the ground up with 7nm process node, performance and power leadership
- Scale
  - 700+ million Qualcomm Snapdragon chipsets shipped per year
  - Billions of discrete chipsets per year
  - Work with over 30 different fabs across the world
- Signal processing expertise
  - Power-efficient signal processing expertise across major areas:
    - Artificial Intelligence
    - eXtended Reality
    - Camera
    - Audio
    - Video
    - Gestures

Apple A13 Bionic

The Apple A13 Bionic processor in the iPhone 11, 11 Pro and 11 Pro Max boosts performance by at least 20% and reduces power consumption by 30% over the previous generation. The A13 Bionic is fabricated on TSMC's N7P process. It integrates 8.5 billion transistors and features two Lightning high-performance cores at 2.65 GHz and four Thunder high-efficiency cores at 1.8 GHz. Besides these six CPU cores, it contains a quad-core GPU and an octa-core Neural Engine dedicated to machine learning. The A13 Bionic also has two built-in machine learning accelerators that make the chip six times faster at matrix multiplication and can handle one trillion operations per second.

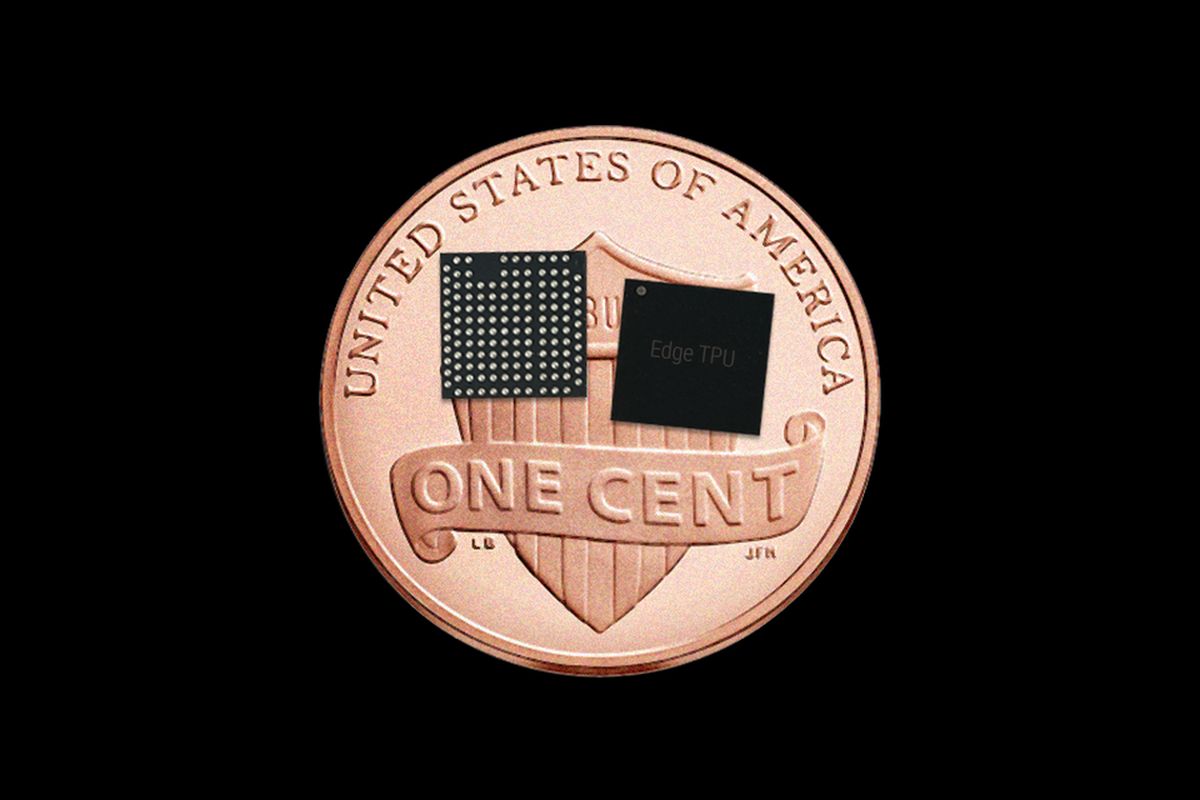

Google Tensor Processing Units (TPU)

Google's Edge TPU (Tensor Processing Unit) is made to run machine learning on miniature devices, such as IoT (Internet of Things) devices. This AI chip carries out inference using machine learning models that have already been trained on datasets. The new Google Edge TPU chips are even smaller than a US penny.

Tensor Processing Units (TPUs) are Google's custom-developed application-specific integrated circuits (ASICs) used to accelerate machine learning workloads. TPUs are built on the foundation of Google's expertise in machine learning. Google's Cloud TPUs let you run machine learning workloads on Google's TPU accelerator hardware using TensorFlow. Cloud TPU is designed for maximum performance and flexibility, helping researchers, developers, and businesses build TensorFlow compute clusters that can leverage CPUs, GPUs, and TPUs. High-level TensorFlow APIs help you get models running on the Cloud TPU hardware.

TPU resources accelerate linear algebra computation, which is used heavily in machine learning applications. TPUs minimize the time-to-accuracy when training large, complex neural network models: models that would take weeks to train on other hardware platforms can converge in hours on TPUs.
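To make the linear algebra point concrete, here is a minimal sketch in plain Python of a single dense neural-network layer written as a matrix-vector product. The function name `dense_layer` and the toy weights are made up for illustration; a real TPU workload would go through TensorFlow rather than hand-written loops.

```python
def dense_layer(weights, bias, inputs):
    """Compute weights @ inputs + bias and count multiply-accumulate steps."""
    outputs = []
    macs = 0  # number of multiply-accumulate operations performed
    for row, b in zip(weights, bias):
        acc = b
        for w, x in zip(row, inputs):
            acc += w * x
            macs += 1
        outputs.append(acc)
    return outputs, macs


# Toy layer (illustrative values only): 3 inputs, 2 outputs.
weights = [[1.0, 2.0, 3.0],
           [0.0, -1.0, 0.5]]
bias = [0.5, 1.0]
inputs = [2.0, 1.0, 4.0]

outputs, macs = dense_layer(weights, bias, inputs)
print(outputs, macs)
```

A layer with m outputs and n inputs needs m × n multiply-accumulate steps per example. This regular, repetitive arithmetic is the kind of work that matrix-oriented hardware is built to speed up.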

Microsoft Project Brainwave – demonstrated using Intel’s 14 nm Stratix 10 FPGA

Microsoft Project Brainwave is a deep learning platform for real-time AI inference in the cloud and on the edge. It uses a soft Neural Processing Unit (NPU), implemented on an FPGA (Field Programmable Gate Array) such as Intel's 14 nm Stratix 10, to accelerate Deep Neural Network (DNN) inferencing. Applications of Project Brainwave include computer vision and natural language processing. Project Brainwave is transforming computing by augmenting CPUs with an interconnected and configurable compute layer composed of programmable silicon.

According to Microsoft, the FPGA configuration achieved more than an order of magnitude improvement in latency and throughput on RNNs (Recurrent Neural Networks) for Bing, with no batching. Because real-time processing with ultra-low latency does not require batching, software overhead and complexity are greatly reduced. Microsoft Project Brainwave is also available in the cloud with Azure Machine Learning and on the edge with Azure Data Box Edge.
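Why batching matters for latency can be shown with a toy model. The sketch below is not Microsoft's measurement or method: the function `batched_latency_ms` and every number in it are hypothetical, chosen only to show how waiting for a batch to fill adds delay to the first request.

```python
def batched_latency_ms(batch_size, arrival_gap_ms, compute_ms):
    """Worst-case latency for the first request in a batch.

    The first request waits for (batch_size - 1) later arrivals,
    then for the compute step on the whole batch.
    """
    wait_ms = (batch_size - 1) * arrival_gap_ms
    return wait_ms + compute_ms


# Hypothetical numbers, chosen only to illustrate the effect.
no_batching = batched_latency_ms(batch_size=1, arrival_gap_ms=2, compute_ms=1)
with_batching = batched_latency_ms(batch_size=16, arrival_gap_ms=2, compute_ms=4)
print(no_batching, with_batching)
```

In this model, a system that needs large batches to reach good throughput makes the first request wait for the rest of the batch to arrive, while a system that is efficient at batch size 1 can answer each request as soon as it comes in.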

Samsung Exynos 9820 SoC

Samsung has developed its NPU (Neural Processing Unit) based AI chips for deep learning algorithms, which are the core element of artificial intelligence (AI) because they are the process by which computers can think and learn like a human being. Samsung's Exynos 9820 is built to maximize intelligence on the go, offering powerful AI capabilities through its NPU and strong performance through its tri-cluster CPU. The chip supports lightweight on-device AI algorithms that compute and process data directly on the device itself. According to Samsung, its latest algorithm solution is over 4 times lighter and 8 times faster than existing algorithms.

The Exynos 9820 pushes the limit of mobile intelligence with an integrated Neural Processing Unit (NPU), which specializes in processing artificial intelligence tasks. It allows the processor to perform AI-related functions seven times faster than its predecessor. From enhancing photos to advanced AR (Augmented Reality) features, the Exynos 9820 with NPU expands AI capabilities of mobile devices.

The Exynos 9820 has two 4th-generation custom CPU cores, two Cortex-A75 cores and four Cortex-A55 cores. This tri-cluster architecture, together with an intelligent task scheduler, boosts multi-core performance by 15 percent compared to the last generation. The 4th-generation custom CPU, with enhanced memory access capability and cutting-edge architecture design, improves single-core performance by up to 20 percent or boosts power efficiency by up to 40 percent.

Huawei Ascend 910 AI Processor

Huawei bills the Ascend 910 as the world's most powerful AI processor. Ascend 910 delivers 256 TFLOPS at FP16 (half-precision floating point) and 512 TOPS at INT8 (integer precision). Ascend 910 also consumes only 310 W at full load. The performance improvement is due to Huawei's own Da Vinci architecture. Ascend 910 is a highly integrated SoC processor: in addition to the Da Vinci AI cores, it integrates CPUs, DVPP, and a Task Scheduler, so it can manage itself and make full use of its high computing power. At the same time, Huawei introduced MindSpore, an all-scenario AI computing framework that supports the development of AI applications. In a typical training session based on ResNet-50, the combination of Ascend 910 and MindSpore is about two times faster at training AI models than other mainstream training cards using TensorFlow.

Baidu Kunlun AI Chip based on Baidu XPU Neural Processing Architecture

The Baidu Kunlun AI chip is designed for cloud, edge and AI applications. Samsung Foundry will mass-produce Kunlun chips in early 2020 on its 14 nm process, using its Interposer-Cube (I-Cube) 2.5D packaging structure. The Kunlun accelerator is based on Baidu's own XPU processor architecture, which includes thousands of small cores for many cloud and edge applications. According to Baidu, Kunlun offers 512 GB/s of memory bandwidth with two HBM2 (High Bandwidth Memory 2) packages and provides up to 260 TOPS at 150 W of power consumption. Kunlun will run ERNIE, Baidu's natural language processing framework, which Baidu says processes language three times faster on Kunlun than on traditional GPUs.
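One rough way to read headline figures like these is throughput per watt. The sketch below uses only the vendor numbers quoted in this article for Ascend 910 and Kunlun; the helper `per_watt` is made up for illustration. The figures come from different vendors and may refer to different numeric precisions, so treat the result as back-of-the-envelope arithmetic rather than a benchmark.

```python
def per_watt(throughput_tera_ops, power_watts):
    """Tera-operations per second delivered per watt of power."""
    return throughput_tera_ops / power_watts


# (throughput in tera-ops per second, power in watts), as quoted by the vendors.
chips = {
    "Ascend 910 (FP16)": (256, 310),
    "Ascend 910 (INT8)": (512, 310),
    "Kunlun": (260, 150),
}

efficiency = {name: round(per_watt(t, w), 2) for name, (t, w) in chips.items()}
for name, value in efficiency.items():
    print(f"{name}: {value} tera-ops per watt")
```

On these quoted numbers, Kunlun comes out at about 1.73 tera-ops per watt, against about 0.83 (FP16) and 1.65 (INT8) for Ascend 910.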